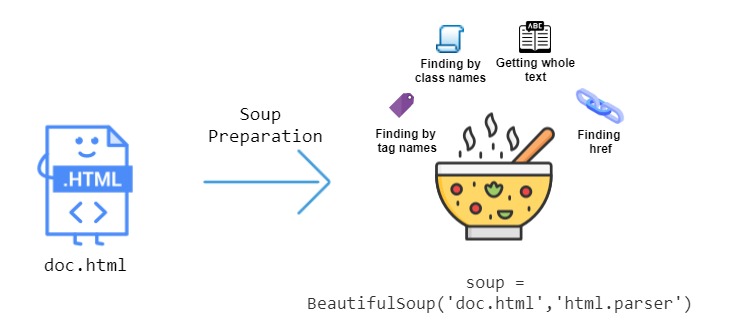

5 Creative Uses for Python's Soup Object

Python's Soup object, the BeautifulSoup object provided by the Beautiful Soup library (bs4), is a versatile tool for web scraping and data extraction. Those are its most common uses. Because it turns any HTML or XML document into a tree you can search and modify, its capabilities extend well beyond simple data retrieval. In this article, we explore five creative and less conventional uses for the Soup object.

1. Automated Image Downloading and Organization

One of the more straightforward yet useful applications of the Soup object is automating the downloading and organizing of images from websites. Parse a page's HTML with the Soup object and extract the image URLs from its `<img>` tags. You can then download each file with an HTTP library such as requests and save it to a directory of your choice. This is handy for collecting large sets of images for data analysis or even artistic purposes.

For example, imagine you're a wildlife photographer interested in capturing the diversity of bird species in your region. You could use the Soup object to scrape images from online birdwatching forums, downloading and organizing them by species. This not only saves you time but also provides a comprehensive dataset for further analysis or even training machine learning models.

Here's a simplified code snippet to demonstrate this process:

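```python
import os
from urllib.parse import urljoin, urlparse

import requests
from bs4 import BeautifulSoup


def download_images(url, save_dir):
    # Fetch the page and parse its HTML
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    soup = BeautifulSoup(response.text, 'html.parser')

    os.makedirs(save_dir, exist_ok=True)
    # Only consider <img> tags that actually have a src attribute
    for image in soup.find_all('img', src=True):
        # Resolve relative paths such as "/img/owl.jpg" against the page URL
        image_url = urljoin(url, image['src'])
        parsed = urlparse(image_url)
        filename = os.path.basename(parsed.path)
        # Skip inline data: URIs and URLs that don't end in a file name
        if parsed.scheme not in ('http', 'https') or not filename:
            continue
        image_response = requests.get(image_url, timeout=10)
        image_response.raise_for_status()
        with open(os.path.join(save_dir, filename), 'wb') as file:
            file.write(image_response.content)


download_images('https://birdwatchingforum.com/forum/species', '/path/to/save/directory')
```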
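The snippet above saves every image into a single folder. Organizing downloads by species needs a label for each image, and where that label lives depends on the site. The sketch below assumes, hypothetically, that the forum puts the species name in each image's alt attribute. `species_folder_for` and `plan_downloads` are illustrative helpers, not part of Beautiful Soup. The sketch only plans destination paths from the HTML, so you can check the folder layout before downloading anything.

```python
import os
import re
from urllib.parse import urljoin, urlparse

from bs4 import BeautifulSoup


def species_folder_for(alt_text):
    """Turn alt text such as 'Northern Cardinal' into a safe folder name."""
    # Assumption: the alt attribute holds the species name; fall back to "unsorted".
    name = re.sub(r"[^a-z0-9]+", "_", (alt_text or "").strip().lower()).strip("_")
    return name or "unsorted"


def plan_downloads(html, page_url, save_dir):
    """Return (absolute image URL, destination path) pairs grouped by species."""
    soup = BeautifulSoup(html, "html.parser")
    plan = []
    for img in soup.find_all("img", src=True):
        image_url = urljoin(page_url, img["src"])  # resolve relative src values
        filename = os.path.basename(urlparse(image_url).path) or "image"
        folder = os.path.join(save_dir, species_folder_for(img.get("alt")))
        plan.append((image_url, os.path.join(folder, filename)))
    return plan


sample = """
<img src="/photos/cardinal1.jpg" alt="Northern Cardinal">
<img src="https://cdn.example.com/img/jay.png" alt="Blue Jay">
<img src="misc.gif">
"""
for image_url, path in plan_downloads(sample, "https://birdwatchingforum.com/forum/species", "birds"):
    print(image_url, "->", path)
```

To perform the downloads, create each destination folder with `os.makedirs(os.path.dirname(path), exist_ok=True)`, then write the bytes from `requests.get(image_url).content` to `path`. Sanitizing the alt text keeps odd characters out of folder names.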

2. Automated Bookmarks and Content Curation

The Soup object can also be used to automate the process of bookmarking and curating content from the web. By extracting specific elements from web pages, such as article titles, summaries, and URLs, you can create a personalized library of interesting content.

Consider a scenario where you're researching a particular topic for an upcoming project. With the Soup object, you can scrape relevant articles from various websites, extract key information, and save it to a local database. This way, you can easily access and review the curated content at your convenience, without the clutter of browsing through multiple tabs or bookmarks.

Below is a code example demonstrating this process:

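```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup


def curate_content(url):
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    soup = BeautifulSoup(response.text, 'html.parser')

    # Append to a local file; swap this for a database if you prefer
    with open('curated_content.txt', 'a', encoding='utf-8') as file:
        for article in soup.find_all('article'):
            title_tag = article.find('h2')
            summary_tag = article.find('p')
            # <article> elements rarely carry an href; the link is usually an <a> inside
            link = article.find('a', href=True)
            if title_tag is None or link is None:
                continue  # skip articles that don't match the expected layout
            title = title_tag.get_text(strip=True)
            summary = summary_tag.get_text(strip=True) if summary_tag else ''
            article_url = urljoin(url, link['href'])  # make relative links absolute
            file.write(f"Title: {title}\nSummary: {summary}\nURL: {article_url}\n\n")


curate_content('https://example.com/research-topic')
```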
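The scenario above mentions a local database, while the snippet appends to a text file. Below is a minimal sketch of the database variant using Python's built-in sqlite3 module. It assumes the same hypothetical page layout: each `<article>` holds an `<h2>` title, a `<p>` summary, and an `<a href>` link. The table name `curated` and both helper functions are illustrative. The sketch parses an HTML string so it can be tried offline; with a live page you would pass `response.text` instead.

```python
import sqlite3
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def extract_articles(html, page_url):
    """Yield (title, summary, absolute_url) for each <article> with a title and link."""
    soup = BeautifulSoup(html, "html.parser")
    for article in soup.find_all("article"):
        heading = article.find("h2")
        link = article.find("a", href=True)
        if heading is None or link is None:
            continue  # skip articles that don't match the assumed layout
        paragraph = article.find("p")
        summary = paragraph.get_text(strip=True) if paragraph else ""
        yield heading.get_text(strip=True), summary, urljoin(page_url, link["href"])


def save_articles(conn, rows):
    """Store rows in the curated table, ignoring URLs that were already saved."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS curated ("
        "url TEXT PRIMARY KEY, title TEXT, summary TEXT)"
    )
    conn.executemany(
        "INSERT OR IGNORE INTO curated (title, summary, url) VALUES (?, ?, ?)", rows
    )
    conn.commit()


sample = """
<article><h2>Intro to Parsing</h2><p>A short overview.</p><a href="/posts/1">Read</a></article>
<article><h2>No link here</h2><p>Skipped.</p></article>
"""
conn = sqlite3.connect(":memory:")  # use a file path such as "curated.db" to persist
save_articles(conn, extract_articles(sample, "https://example.com/research-topic"))
save_articles(conn, extract_articles(sample, "https://example.com/research-topic"))  # re-run
print(conn.execute("SELECT title, url FROM curated").fetchall())
```

The URL is the primary key and the insert uses `INSERT OR IGNORE`, so re-running the scraper does not create duplicate entries.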

3. Dynamic Content Generation for Web Development

The Soup object's ability to parse HTML and extract data from it can also support content generation for web development. By analyzing the structure of existing web pages, you can build templates that are automatically populated with new data.

For instance, imagine you're building a dynamic blog platform. With the Soup object, you can analyze the structure of a sample blog post, identify the different elements (such as headings, paragraphs, images, and captions), and create a template that can be populated with new content. This approach not only speeds up development but also ensures a consistent and structured presentation of content across your platform.

The following code snippet illustrates how you might generate a basic blog post template:

```python
from bs4 import BeautifulSoup


def generate_blog_post_template(soup):
    # Collect headings, paragraphs and images in document order
    post_elements = soup.find_all(['h2', 'p', 'img'])
    template = ""
    for element in post_elements:
        if element.name == 'h2':
            template += f"<h2>{element.get_text()}</h2>\n"
        elif element.name == 'p':
            template += f"<p>{element.get_text()}</p>\n"
        elif element.name == 'img':
            template += f'<img src="{element.get("src", "")}">\n'
    return template


# Example usage
with open('sample_post.html', 'r', encoding='utf-8') as file:
    soup = BeautifulSoup(file.read(), 'html.parser')

template = generate_blog_post_template(soup)
print(template)
```
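The function above rebuilds a post's elements as a flat string. The template idea described earlier is to reuse a sample post's structure for new content. One way to get closer to that is to keep the parsed tree and swap in new text. This is a minimal sketch, and it assumes the new content arrives as a list of strings in document order for the `<h2>` and `<p>` elements. `fill_template` is a hypothetical helper. Assigning to a tag's `.string` replaces everything inside that tag with the given text. Beautiful Soup escapes characters such as `<` when the tree is converted back to HTML.

```python
from bs4 import BeautifulSoup


def fill_template(sample_html, new_texts):
    """Reuse the structure of sample_html, replacing h2/p text in document order."""
    soup = BeautifulSoup(sample_html, "html.parser")
    slots = soup.find_all(["h2", "p"])
    if len(new_texts) != len(slots):
        raise ValueError(f"expected {len(slots)} text blocks, got {len(new_texts)}")
    for tag, text in zip(slots, new_texts):
        tag.string = text  # replaces the tag's children with a single text node
    return str(soup)


sample_post = '<article><h2>Old title</h2><img src="cover.jpg"><p>Old body</p></article>'
print(fill_template(sample_post, ["Spring Migration", "Warblers arrive <early> this year."]))
```

Elements the sketch doesn't touch, such as the `<img>` tag, keep their original attributes. As a result, the output preserves the sample post's layout.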


4. Data Cleaning and Preprocessing for Machine Learning

When working with web-scraped data, preprocessing and cleaning the data is often a crucial step before it can be used for machine learning or analysis. The Soup object can be a valuable tool in this process, helping to standardize and clean extracted data.

Consider a scenario where you've scraped product reviews from an e-commerce website. The reviews might contain HTML tags, special characters, or even images. With the Soup object, you can parse the reviews, remove unwanted elements, and clean the text to ensure it's suitable for analysis or training machine learning models.

Here's a simplified code example for cleaning and preprocessing web-scraped text data:

from bs4 import BeautifulSoup

import re

def clean_text(text):

# Remove HTML tags

soup = BeautifulSoup(text, 'html.parser')

clean_text = soup.get_text()

# Remove special characters and extra spaces

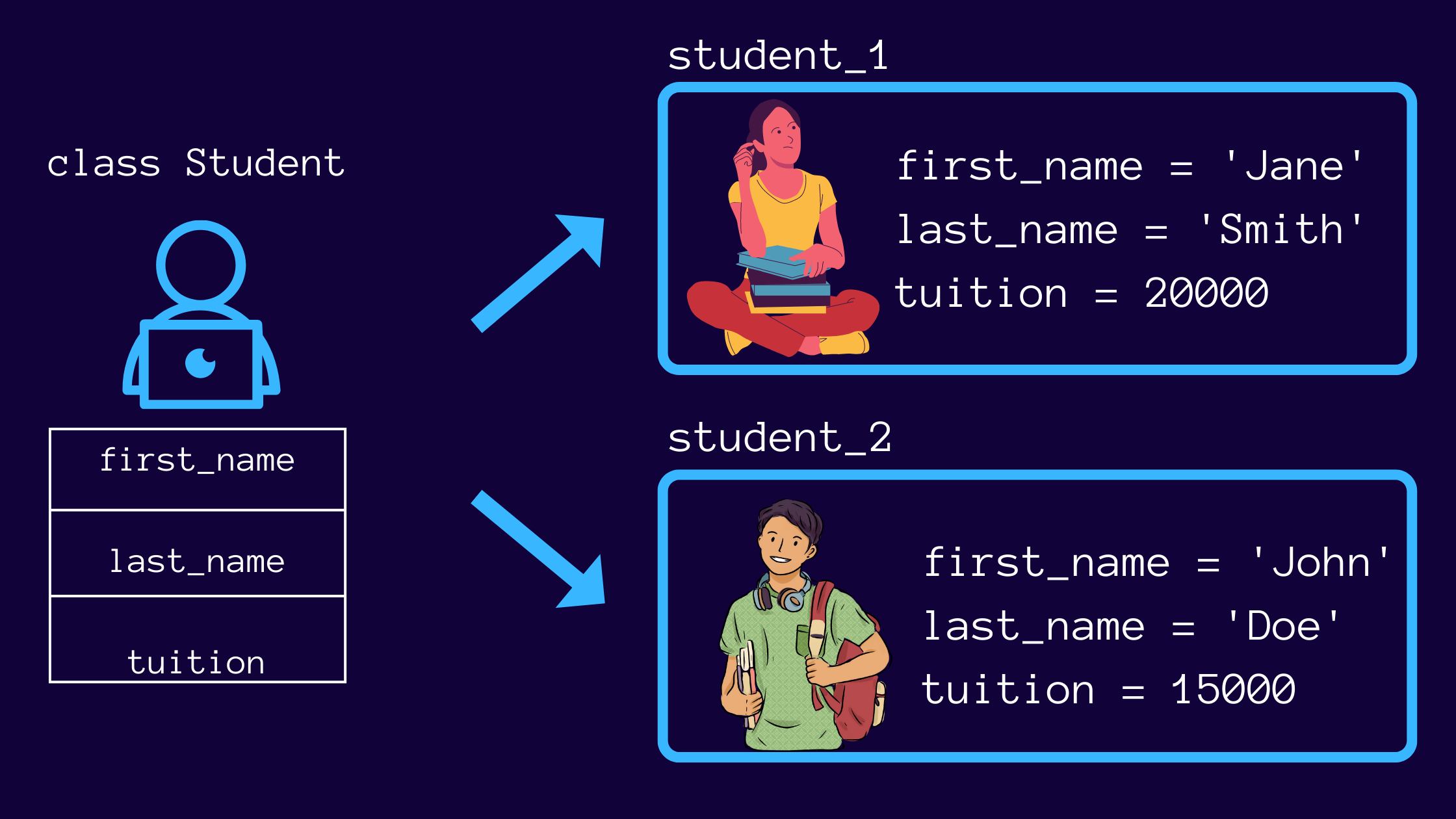

clean_text = re.sub(r'[^a-zA-Z0-9\s]', '', clean_text)

clean_text = re.sub(r'\s+', ' ', clean_text)

return clean_text

# Example usage

raw_text = "This is a sample review.

It has two paragraphs.

"

clean_text = clean_text(raw_text)

print(clean_text)
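The `clean_text` function above removes tags but keeps their text. As a result, the contents of quoted replies or site widgets end up in the review. If reviews contain elements you don't want at all, you can remove them from the tree before extracting text. Examples include embedded images, quoted replies, and seller badges. This is a minimal sketch, and the tag list and the `review-meta` class name are assumptions to adapt to your data. It uses `extract()`, which detaches an element and everything inside it from the tree.

```python
from bs4 import BeautifulSoup

# Assumption: these tags and CSS classes count as noise in your review data.
UNWANTED_TAGS = ["img", "script", "style", "blockquote"]
UNWANTED_CLASSES = ["review-meta"]  # hypothetical class name used by the target site


def strip_unwanted(review_html):
    """Remove unwanted elements from a review and return its remaining text."""
    soup = BeautifulSoup(review_html, "html.parser")
    for tag in soup.find_all(UNWANTED_TAGS):
        tag.extract()  # detach the element and its contents from the tree
    for class_name in UNWANTED_CLASSES:
        for tag in soup.find_all(class_=class_name):
            tag.extract()
    # A space separator plus strip=True joins the remaining text nodes cleanly.
    return soup.get_text(" ", strip=True)


sample_review = (
    '<div><p>Great <b>battery</b> life!</p><img src="x.jpg">'
    "<blockquote>Quoted text</blockquote>"
    '<span class="review-meta">Verified buyer</span><p>Would buy again.</p></div>'
)
print(strip_unwanted(sample_review))
```

The result can then go through `clean_text` for the character-level cleanup.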

5. Automated News Summarization and Analysis

The Soup object can also serve as the extraction step in automated news summarization and analysis, giving you a quick overview of the latest developments in various fields. By parsing the HTML structure of news articles, you can extract key information such as headlines, summaries, and publication dates, and then analyze it further.

For instance, imagine you're interested in staying updated with the latest developments in technology. By scraping and summarizing tech news articles, you can create a daily or weekly digest, highlighting the most important stories. This can be particularly useful for professionals who need to stay informed but don't have the time to read through extensive articles.

The following code snippet shows how you might extract a basic summary of a news article (its headline, first paragraph, and publication date) using the Soup object:

```python
import requests
from bs4 import BeautifulSoup


def summarize_news(url):
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extract the headline and use the first paragraph as a rough summary
    headline_tag = soup.find('h1')
    summary_tag = soup.find('p')
    headline = headline_tag.get_text(strip=True) if headline_tag else 'N/A'
    summary = summary_tag.get_text(strip=True) if summary_tag else 'N/A'

    # Extract the publication date from schema.org-style markup, if present
    date_element = soup.find('time', {'itemprop': 'datePublished'})
    date = date_element.get('datetime', 'N/A') if date_element else 'N/A'

    # Perform further analysis or save to a database
    print(f"Headline: {headline}\nSummary: {summary}\nDate: {date}")


summarize_news('https://example.com/latest-tech-news')
```
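For the daily or weekly digest described above, you can run the same extraction over several articles, then sort and format the results. The minimal sketch below works on HTML strings you've already fetched. Like the snippet above, it assumes a headline in `<h1>`, a rough summary in the first `<p>`, and a date in `<time itemprop="datePublished" datetime="...">`. `extract_story` and `build_digest` are hypothetical names. Sorting on the raw `datetime` strings gives chronological order only if every page uses the same ISO 8601 format and time zone. If that isn't guaranteed, parse them with `datetime.fromisoformat`.

```python
from bs4 import BeautifulSoup


def extract_story(html):
    """Pull headline, lead paragraph and publication date from one article."""
    soup = BeautifulSoup(html, "html.parser")
    headline = soup.find("h1")
    lead = soup.find("p")
    time_tag = soup.find("time", attrs={"itemprop": "datePublished"})
    return {
        "headline": headline.get_text(strip=True) if headline else "(no headline)",
        "lead": lead.get_text(" ", strip=True) if lead else "",
        "date": time_tag.get("datetime", "") if time_tag else "",
    }


def build_digest(pages, max_lead_chars=120):
    """Format a newest-first text digest from a list of article HTML strings."""
    stories = [extract_story(html) for html in pages]
    # Undated stories have an empty date string and therefore sort last.
    stories.sort(key=lambda story: story["date"], reverse=True)
    lines = []
    for story in stories:
        lead = story["lead"]
        if len(lead) > max_lead_chars:
            lead = lead[:max_lead_chars].rstrip() + "..."
        lines.append(f"[{story['date'] or 'N/A'}] {story['headline']}\n  {lead}")
    return "\n".join(lines)


pages = [
    '<h1>Chip Shortage Eases</h1><time itemprop="datePublished" datetime="2024-03-01">Mar 1</time><p>Supply is recovering.</p>',
    '<h1>New Python Release</h1><time itemprop="datePublished" datetime="2024-03-05">Mar 5</time><p>Faster startup times.</p>',
]
print(build_digest(pages))
```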

How does the Soup object work under the hood?

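Beautiful Soup doesn't parse markup by itself. It passes the document to an underlying parser (Python's built-in html.parser, or lxml or html5lib if installed) and builds a parse tree from the result. XML parsing requires lxml. Each element becomes a Tag node that stores its attributes in a dictionary. Its children are nested Tags and text nodes (NavigableString objects). Methods such as find() and find_all(), together with properties such as .parent, .children, and .next_sibling, let you search and navigate that tree to extract data.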
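Here is a short illustration of that tree model, using a made-up HTML fragment. Each element is a Tag with a `.name` and an `.attrs` dictionary. You can move up, down, and sideways with `.parent`, `.children`, and `.next_sibling`, and text lives in NavigableString nodes. Swapping `"html.parser"` for `"lxml"` changes only the underlying parser, assuming lxml is installed.

```python
from bs4 import BeautifulSoup, NavigableString, Tag

html = '<ul id="birds"><li class="species">Robin</li><li class="species">Wren</li></ul>'
soup = BeautifulSoup(html, "html.parser")

ul = soup.find("ul")
print(ul.name, ul.attrs)             # tag name and its attribute dictionary
first_li = ul.find("li")
print(first_li.parent.name)          # walk up the tree to the enclosing <ul>
for child in ul.children:            # walk down: direct children of <ul>
    print(type(child).__name__, child.get_text())
print(first_li.next_sibling.get_text())  # walk sideways to the next <li>
text_node = first_li.contents[0]         # the text inside the first <li>
print(isinstance(text_node, NavigableString), isinstance(first_li, Tag))
```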

Are there any legal or ethical considerations when using the Soup object for web scraping?

Yes, it's important to be aware of the legal and ethical implications of web scraping. Scraping publicly accessible pages is often permitted, but the rules vary by jurisdiction and by site. Practices such as scraping private or protected data, violating a site's terms of service, or overwhelming a server with requests can be unethical or even illegal. Always make sure you have the necessary permissions and respect the website's policies when scraping data.
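One concrete way to follow a site's stated crawling rules, and to avoid overwhelming it, is to check its robots.txt file and pause between requests. Below is a minimal sketch using the standard library's urllib.robotparser. The user-agent string and the one-second default delay are assumptions. Note that robots.txt is not a substitute for reading a site's terms of service. The example parses robots.txt text directly so it runs offline. Against a live site you would call `set_url()` and `read()` on the parser instead.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical user-agent; identify your scraper honestly.
USER_AGENT = "my-research-bot"


def make_robot_rules(robots_txt):
    """Build a RobotFileParser from robots.txt text (live sites: set_url() + read())."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def polite_urls(rules, urls, default_delay=1.0):
    """Yield URLs that robots.txt allows, pausing between them."""
    # Honor a Crawl-delay directive if present, otherwise use the default.
    delay = rules.crawl_delay(USER_AGENT)
    if delay is None:
        delay = default_delay
    first = True
    for url in urls:
        if not rules.can_fetch(USER_AGENT, url):
            continue  # disallowed for this user-agent
        if not first:
            time.sleep(delay)  # pause before each URL after the first
        first = False
        yield url


rules = make_robot_rules("User-agent: *\nDisallow: /private/\n")
for url in polite_urls(rules, ["https://example.com/a", "https://example.com/private/b"], default_delay=0):
    print("would fetch", url)  # a real scraper would call requests.get(url) here
```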

Can the Soup object be used with other programming languages besides Python?

Beautiful Soup itself is a Python library, but similar libraries exist for other languages. In JavaScript (Node.js), Cheerio parses HTML and exposes a jQuery-style API for querying it. In Java and Ruby, Jsoup and Nokogiri, respectively, offer comparable functionality.