3 Ways to Optimize GPU Scheduling

GPU scheduling, the process of deciding which tasks run on which GPUs and when, is a critical aspect of modern computing, especially in high-performance computing (HPC) and data-intensive applications. With growing demand for parallel processing, driven largely by machine learning and artificial intelligence workloads, optimizing GPU scheduling has become essential for maximizing resource utilization and performance.

This article covers three strategies for improving GPU scheduling: task prioritization and load balancing, adaptive scheduling driven by performance profiling, and cluster-level collaborative scheduling. By applying these techniques, organizations and developers can get more out of their existing GPU infrastructure across a wide range of workloads.

1. Task Prioritization and Load Balancing

Efficient GPU scheduling relies on intelligent task prioritization and load balancing mechanisms. By analyzing the characteristics and computational requirements of tasks, schedulers can make informed decisions to allocate resources effectively.

One approach is to use a priority-based scheduling algorithm that assigns higher priority to tasks with stricter deadlines or critical performance requirements. Crucial tasks then run first, which reduces their waiting time and prevents lower-priority work from blocking them.
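A minimal Python sketch of the priority-based approach, built on the standard-library `heapq` module. The `Task` and `PriorityScheduler` names, the integer priority scale (lower number means more urgent), and the first-free-GPU assignment rule are illustrative assumptions, not the API of any particular scheduler.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class Task:
    priority: int                     # lower number = more urgent
    seq: int                          # submission order, breaks ties FIFO
    name: str = field(compare=False)  # excluded from ordering


class PriorityScheduler:
    """Hypothetical scheduler: hands the most urgent queued task to a free GPU."""

    def __init__(self, gpu_ids):
        self.free_gpus = list(gpu_ids)
        self.queue = []                   # min-heap of Task
        self._counter = itertools.count()

    def submit(self, name, priority):
        heapq.heappush(self.queue, Task(priority, next(self._counter), name))

    def dispatch(self):
        """Pair queued tasks with free GPUs, most urgent first."""
        assignments = []
        while self.queue and self.free_gpus:
            task = heapq.heappop(self.queue)
            gpu = self.free_gpus.pop(0)
            assignments.append((task.name, gpu))
        return assignments

    def release(self, gpu):
        """Return a GPU to the free pool once its task finishes."""
        self.free_gpus.append(gpu)


sched = PriorityScheduler(gpu_ids=[0, 1])
sched.submit("batch-report", priority=5)
sched.submit("online-inference", priority=0)
sched.submit("nightly-training", priority=9)
print(sched.dispatch())  # [('online-inference', 0), ('batch-report', 1)]
```

The sequence counter keeps equal-priority tasks in submission order. A real scheduler would also need to prevent starvation of low-priority work, for example by raising a task's priority the longer it waits (aging).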

Load balancing is another crucial aspect. Modern GPU systems often consist of multiple GPUs, and distributing tasks evenly across these resources is essential to avoid imbalances and ensure optimal utilization. Load balancing algorithms can dynamically adjust task assignments based on GPU availability, workload characteristics, and historical performance data.

For example, consider a data center with 16 GPUs running various machine learning tasks. A load-balancing algorithm can spread tasks across the GPUs so that every GPU stays busy and none becomes a hotspot while others sit idle. This maximizes resource utilization and improves overall system performance.
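The distribution step can be sketched with the longest-processing-time-first (LPT) greedy heuristic: sort tasks by estimated cost, then give each one to the currently least-loaded GPU. Per-task costs (for example, estimated GPU-seconds) are assumed to be known in advance; `balance` is a hypothetical helper, and the small two-GPU example stands in for the 16-GPU case.

```python
import heapq


def balance(task_costs, num_gpus):
    """Assign tasks to GPUs with the LPT greedy heuristic.

    task_costs: {task_name: estimated cost}; returns (placement, load per GPU).
    """
    # Min-heap of (accumulated load, gpu_id): the root is always the least-loaded GPU.
    loads = [(0.0, gpu) for gpu in range(num_gpus)]
    heapq.heapify(loads)
    placement = {}
    # Largest tasks first, so smaller ones can fill the remaining gaps.
    for task, cost in sorted(task_costs.items(), key=lambda kv: kv[1], reverse=True):
        load, gpu = heapq.heappop(loads)
        placement[task] = gpu
        heapq.heappush(loads, (load + cost, gpu))
    return placement, {gpu: load for load, gpu in loads}


costs = {"a": 7, "b": 5, "c": 4, "d": 3, "e": 1}  # hypothetical GPU-seconds
placement, per_gpu = balance(costs, num_gpus=2)
print(per_gpu)  # {0: 10.0, 1: 10.0}
```

LPT is a heuristic rather than an optimal solver, but it is cheap and tends to keep the busiest GPU close to the average load. Calling `balance(costs, num_gpus=16)` applies the same logic to the 16-GPU scenario.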

Strategies for Task Prioritization and Load Balancing

- Priority-based Scheduling: Implement a priority queue that sorts tasks based on their urgency or importance. Tasks with higher priority can be assigned to available GPUs first, ensuring timely execution.

- Dynamic Load Balancing: Use real-time performance metrics to monitor GPU utilization. If a particular GPU is heavily loaded, tasks can be redistributed to underutilized GPUs, maintaining a balanced workload across the system.

- Historical Data Analysis: Analyze past task execution patterns to predict future resource requirements. This allows the scheduler to proactively allocate resources based on historical trends, optimizing performance and minimizing idle time.
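For the historical-data strategy, one simple predictor is an exponential moving average (EMA) of each task type's past runtimes, which weights recent runs more heavily than older ones. The sketch assumes runtimes are recorded per task type after each run; the class name, the `alpha` smoothing factor, and the fallback value for unseen task types are illustrative choices.

```python
class RuntimePredictor:
    """Predict a task type's next runtime from its history with an exponential moving average."""

    def __init__(self, alpha=0.3, default_seconds=60.0):
        self.alpha = alpha                      # weight of the newest observation (0 < alpha <= 1)
        self.default_seconds = default_seconds  # fallback for task types never seen before
        self.estimates = {}

    def record(self, task_type, seconds):
        """Fold one observed runtime into the running estimate."""
        prev = self.estimates.get(task_type)
        if prev is None:
            self.estimates[task_type] = float(seconds)
        else:
            self.estimates[task_type] = self.alpha * seconds + (1 - self.alpha) * prev

    def predict(self, task_type):
        return self.estimates.get(task_type, self.default_seconds)


predictor = RuntimePredictor(alpha=0.5)
for observed in (100, 120, 90):  # hypothetical past runtimes, in seconds
    predictor.record("resnet-finetune", observed)
print(predictor.predict("resnet-finetune"))  # 100.0
print(predictor.predict("never-seen"))       # 60.0
```

Predictions like these can serve as the per-task cost estimates that a load balancer consumes, so new work is steered toward the GPUs expected to free up soonest.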

2. Adaptive Task Scheduling with GPU Performance Profiling

Understanding the performance characteristics of tasks and GPUs is crucial for effective scheduling. By profiling GPU performance, schedulers can gain valuable insights into task execution times, resource utilization, and potential bottlenecks.

GPU performance profiling involves running representative tasks and measuring various performance metrics such as execution time, memory bandwidth, and GPU utilization. This data can then be used to optimize task scheduling by matching tasks with suitable GPUs and resource configurations.
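The timing side of profiling can be sketched as a small harness, assuming the representative task is wrapped in a Python callable. Warm-up runs are discarded so one-time costs such as compilation or memory allocation do not skew the numbers, and the median is reported because it is robust to outliers. Memory bandwidth and GPU utilization would come from vendor tooling (on NVIDIA hardware, for example, Nsight or NVML) rather than from this wall-clock timer.

```python
import statistics
import time


def profile(task_fn, warmup=2, repeats=5):
    """Time a representative task and summarise its wall-clock runtime in seconds.

    If task_fn launches GPU work asynchronously, it must wait for the device to
    finish before returning (in PyTorch, torch.cuda.synchronize()); otherwise
    only the kernel launches are timed.
    """
    for _ in range(warmup):
        task_fn()  # absorb one-time costs: compilation, allocation, cache warm-up
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        task_fn()
        samples.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(samples),
        "min_s": min(samples),
        "max_s": max(samples),
    }


# Stand-in CPU workload; a real profile would time an actual GPU kernel or training step.
print(profile(lambda: sum(i * i for i in range(100_000))))
```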

For instance, consider a machine learning training process in which different tasks have varying memory requirements. By profiling the tasks, the scheduler can identify memory-intensive operations and place them on GPUs with sufficient memory capacity and bandwidth. This adaptive approach improves overall system performance and reduces the risk of memory-related bottlenecks.
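A sketch of the memory-aware matching step, assuming each task's peak memory footprint is known from profiling and each GPU reports its free memory and peak bandwidth. The `Gpu` record, the 10% headroom, and the best-fit tie-break are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    gpu_id: int
    free_mem_gb: float    # currently unallocated device memory, GB
    bandwidth_gbs: float  # peak memory bandwidth, GB/s


def pick_gpu(peak_mem_gb, gpus, headroom=1.1):
    """Choose a GPU for a task whose profiled peak memory is peak_mem_gb, or None if none fits."""
    needed = peak_mem_gb * headroom  # safety margin above the profiled peak
    candidates = [g for g in gpus if g.free_mem_gb >= needed]
    if not candidates:
        return None
    # Highest bandwidth first; among equals, the tightest fit (least free memory) wins.
    return max(candidates, key=lambda g: (g.bandwidth_gbs, -g.free_mem_gb))


gpus = [Gpu(0, free_mem_gb=20.0, bandwidth_gbs=900.0),   # hypothetical cluster state
        Gpu(1, free_mem_gb=70.0, bandwidth_gbs=2000.0),
        Gpu(2, free_mem_gb=35.0, bandwidth_gbs=2000.0)]
print(pick_gpu(30.0, gpus).gpu_id)  # 2
print(pick_gpu(40.0, gpus).gpu_id)  # 1
print(pick_gpu(80.0, gpus))         # None
```

Preferring the smallest GPU that still fits leaves the largest-memory GPUs free for tasks that genuinely need them, and returning `None` signals that the task should wait rather than risk an out-of-memory failure.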

Key Techniques for Adaptive Task Scheduling

- Performance Profiling: Run representative tasks and collect performance metrics such as execution time, memory access patterns, and GPU utilization. Use this data to create performance profiles for different tasks.

- GPU Selection: Based on the performance profiles, assign tasks to GPUs with optimal resource configurations. For example, memory-intensive tasks can be scheduled on GPUs with higher memory bandwidth, while compute-intensive tasks can be allocated to GPUs with higher computational capabilities.

- Dynamic Adaptation: Continuously monitor GPU performance during task execution. If a task experiences performance degradation or bottlenecks, the scheduler can dynamically adjust resource allocation or offload the task to a different GPU to optimize performance.
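One common way to decide whether a task is memory-intensive or compute-intensive is the roofline model: the task's arithmetic intensity (FLOPs per byte of memory traffic) is compared with a GPU's machine balance (peak FLOP/s divided by peak bandwidth). The sketch below uses that model to estimate runtimes from profiled totals and to pick the GPU with the lowest estimate. The GPU specs and task figures are hypothetical, and the roofline is an idealized bound that ignores effects such as caching and kernel launch overhead.

```python
from dataclasses import dataclass


@dataclass
class GpuSpec:
    name: str
    peak_tflops: float    # peak compute throughput, TFLOP/s
    bandwidth_tbs: float  # peak memory bandwidth, TB/s


@dataclass
class TaskProfile:
    name: str
    tflop: float     # total floating-point work, TFLOP
    tb_moved: float  # total memory traffic, TB


def roofline_time(task, gpu):
    """Roofline estimate: a task takes at least as long as its compute or its memory traffic."""
    compute_s = task.tflop / gpu.peak_tflops
    memory_s = task.tb_moved / gpu.bandwidth_tbs
    return max(compute_s, memory_s)


def classify(task, gpu):
    intensity = task.tflop / task.tb_moved         # FLOPs per byte
    balance = gpu.peak_tflops / gpu.bandwidth_tbs  # FLOPs per byte at the roofline's ridge point
    return "memory-bound" if intensity < balance else "compute-bound"


def best_gpu(task, gpus):
    return min(gpus, key=lambda g: roofline_time(task, g))


gpus = [GpuSpec("gpu-a", peak_tflops=100.0, bandwidth_tbs=1.0),  # hypothetical compute-heavy GPU
        GpuSpec("gpu-b", peak_tflops=50.0, bandwidth_tbs=2.0)]   # hypothetical bandwidth-heavy GPU
stream = TaskProfile("stream", tflop=1.0, tb_moved=0.1)  # ~10 FLOPs/byte
dense = TaskProfile("dense", tflop=100.0, tb_moved=0.1)  # ~1000 FLOPs/byte
for task in (stream, dense):
    print(task.name, classify(task, gpus[0]), "->", best_gpu(task, gpus).name)
# stream memory-bound -> gpu-b
# dense compute-bound -> gpu-a
```

With these numbers, the low-intensity `stream` task lands on the bandwidth-rich `gpu-b`, while the high-intensity `dense` task goes to the compute-rich `gpu-a`.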

3. GPU Clustering and Collaborative Scheduling

In large-scale computing environments with multiple GPU nodes, collaborative scheduling can further enhance resource utilization and performance.

GPU clustering involves grouping multiple GPUs together to form a collaborative computing unit. By treating a cluster of GPUs as a single resource, schedulers can optimize task placement and resource allocation across the entire cluster.

Collaborative scheduling algorithms consider the interdependencies and communication patterns of tasks. By intelligently scheduling tasks across GPU clusters, the system can minimize data transfer overhead and maximize computational efficiency. This approach is particularly beneficial for distributed computing applications and machine learning frameworks that require intensive inter-GPU communication.
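Communication-aware placement can be sketched as a greedy heuristic over a map of traffic between task pairs: visit pairs from heaviest to lightest traffic and try to co-locate each pair on one node, so the chattiest links stay on fast intra-node interconnects. The traffic figures, node names, and slot counts are hypothetical, tasks with no recorded traffic are left to an ordinary load balancer, and a proper graph-partitioning method could replace this one-pass greedy rule.

```python
def place(traffic, node_slots):
    """Greedy heavy-pair-first placement that co-locates the chattiest task pairs.

    traffic:    {(task_a, task_b): GB exchanged per step}
    node_slots: {node_name: free GPU slots}
    Tasks that never appear in `traffic` are not placed here.
    """
    free = dict(node_slots)
    placement = {}

    def take(task, node):
        placement[task] = node
        free[node] -= 1

    def roomiest(min_free=1):
        """Node with the most free slots among those with at least min_free, else None."""
        options = [n for n in free if free[n] >= min_free]
        return max(options, key=lambda n: free[n]) if options else None

    # Visit pairs from heaviest to lightest traffic.
    for (a, b), _gb in sorted(traffic.items(), key=lambda kv: kv[1], reverse=True):
        unplaced = [t for t in (a, b) if t not in placement]
        if len(unplaced) == 2:
            node = roomiest(min_free=2)
            if node is not None:  # one node can host the whole pair
                take(a, node)
                take(b, node)
                continue
        for task in unplaced:
            peer = b if task == a else a
            node = placement.get(peer)
            if node is None or free[node] == 0:
                node = roomiest()  # peer unplaced or its node is full: fall back
            if node is None:
                raise RuntimeError("not enough GPU slots for all tasks")
            take(task, node)
    return placement


def cross_node_traffic(traffic, placement):
    """Total traffic between tasks that ended up on different nodes."""
    return sum(gb for (a, b), gb in traffic.items() if placement[a] != placement[b])


traffic = {("w0", "w1"): 100, ("w2", "w3"): 100, ("w1", "w2"): 5}  # hypothetical GB/step
plan = place(traffic, {"node-a": 2, "node-b": 2})
print(plan)                               # {'w0': 'node-a', 'w1': 'node-a', 'w2': 'node-b', 'w3': 'node-b'}
print(cross_node_traffic(traffic, plan))  # 5

round_robin = {"w0": "node-a", "w1": "node-b", "w2": "node-a", "w3": "node-b"}
print(cross_node_traffic(traffic, round_robin))  # 205
```

Compared with a naive round-robin layout, the greedy plan keeps both heavy links on-node, cutting cross-node traffic from 205 to 5 GB per step in this toy case.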

Benefits of GPU Clustering and Collaborative Scheduling

- Efficient Resource Utilization: GPU clustering allows for better load balancing across the entire system, ensuring that all resources are utilized optimally.

- Reduced Communication Overhead: By placing tasks that exchange large amounts of data on the same node or cluster, the system can minimize data transfer between GPUs, improving overall performance.

- Scalability: Collaborative scheduling enables seamless scaling of computing resources as the demand for parallel processing increases. As more GPUs are added to the cluster, the scheduler can dynamically adjust task assignments to maintain efficient resource utilization.

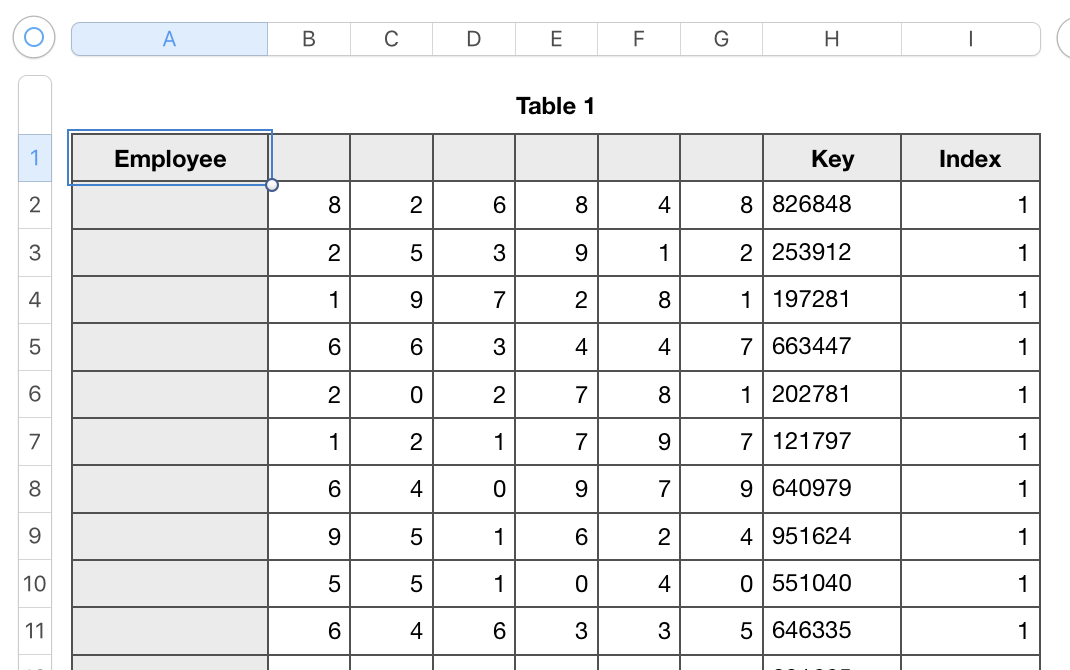

| Strategy | Key Benefits |
|---|---|
| Task Prioritization and Load Balancing | Maximizes resource utilization, reduces bottlenecks, and improves overall system throughput. |
| Adaptive Task Scheduling with GPU Performance Profiling | Optimizes task placement based on performance characteristics, reducing memory bottlenecks and improving computational efficiency. |
| GPU Clustering and Collaborative Scheduling | Enhances resource utilization in large-scale environments, minimizes communication overhead, and enables seamless scalability. |

FAQ

What are the key challenges in GPU scheduling?

GPU scheduling faces challenges such as load balancing, task prioritization, and efficient resource allocation. These challenges require sophisticated algorithms and profiling techniques to ensure optimal performance.

How does task prioritization improve GPU scheduling?

Task prioritization ensures that critical or time-sensitive tasks are executed first, minimizing their delays. Ordering work by importance also keeps GPUs from being tied up by low-value jobs while urgent ones wait.

What are the benefits of GPU performance profiling in scheduling?

GPU performance profiling provides valuable insights into task execution times and resource requirements. By understanding these characteristics, schedulers can make informed decisions to optimize task placement and resource allocation.