Automate Your Databricks Workflows

In today's data-driven world, organizations are looking for ways to streamline their processes and get more value from their data. Databricks, a unified data analytics platform built on Apache Spark, provides a collaborative, scalable environment for data engineering, data science, and analytics. In this article, we look at Databricks Workflows, the platform's built-in orchestration service, and explore how automating workflows can make data processing and analysis more reliable and less manual.

Understanding Databricks Workflows

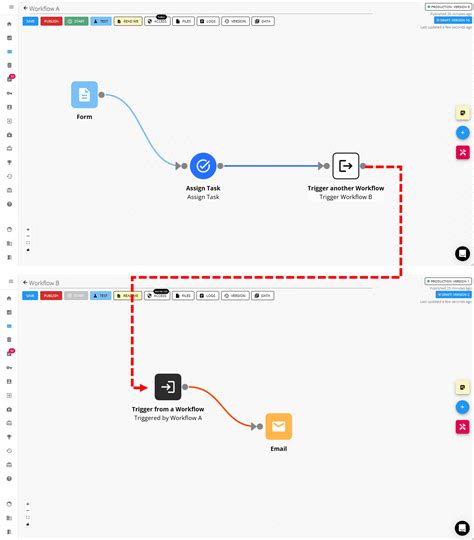

Databricks Workflows is the orchestration service built into Databricks for designing, running, and managing multi-step data pipelines. A workflow (called a job) is made up of tasks, each performing a specific operation such as running a notebook, a Python script, a SQL query, or a Delta Live Tables pipeline. You declare which tasks depend on which, and Databricks runs them in that order, running independent tasks in parallel. The Workflows UI displays the tasks and their dependencies as a graph, which makes even complex pipelines easy to inspect.
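To make the idea of interconnected tasks concrete, here is a minimal sketch of a hypothetical four-task pipeline. It assumes the field names of the Databricks Jobs API 2.1 task list (`task_key`, `depends_on`, `notebook_task`); the task names and notebook paths are placeholders, and cluster settings are left out. Databricks computes the run order itself, so the `execution_order` helper, which uses Python's standard `graphlib` module, only illustrates what the declared dependencies imply.

```python
from graphlib import TopologicalSorter

# Hypothetical four-task job in the shape of a Jobs API 2.1 "tasks" list.
# Task keys and notebook paths are placeholders; cluster settings are omitted.
tasks = [
    {"task_key": "ingest", "notebook_task": {"notebook_path": "/Pipelines/ingest"}},
    {
        "task_key": "clean",
        "depends_on": [{"task_key": "ingest"}],
        "notebook_task": {"notebook_path": "/Pipelines/clean"},
    },
    {
        "task_key": "train_model",
        "depends_on": [{"task_key": "clean"}],
        "notebook_task": {"notebook_path": "/Pipelines/train_model"},
    },
    {
        "task_key": "report",
        "depends_on": [{"task_key": "clean"}],
        "notebook_task": {"notebook_path": "/Pipelines/report"},
    },
]


def execution_order(tasks):
    """Return task keys in an order that respects every depends_on edge."""
    # Map each task to the set of tasks it waits for (its predecessors).
    graph = {
        t["task_key"]: {dep["task_key"] for dep in t.get("depends_on", [])}
        for t in tasks
    }
    return list(TopologicalSorter(graph).static_order())


print(execution_order(tasks))
```

`train_model` and `report` both wait only for `clean`, so their relative order in the printed list is arbitrary; in a real job, independent tasks like these can run in parallel.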

One of the key advantages of Databricks Workflows is its ability to handle a wide range of data operations. From data ingestion and cleaning to complex transformations and machine learning model training, Databricks Workflows can accommodate various data processing needs. Additionally, it offers robust scheduling capabilities, allowing you to automate your workflows to run at specific intervals or in response to events.

Benefits of Databricks Workflows

- Streamlined Data Processing: Databricks Workflows simplifies the process of designing and executing data pipelines. With its visual interface, you can connect tasks and see at a glance how data moves through your pipeline.

- Scalability and Performance: Tasks run on Databricks compute powered by Apache Spark, so each step can distribute its work across a cluster. Autoscaling and job clusters that exist only for the duration of a run let workflows handle large volumes of data while keeping resource usage proportional to the work being done.

- Collaborative Environment: Workflows live in a shared workspace, so different team members can own the notebooks or scripts behind different tasks in the same workflow. Job permissions control who can view, run, or manage each workflow, keeping collaboration organized.

- Version Control and Reproducibility: Task code can be kept in Git, either in Databricks Git folders or by having a job check out a specific branch, tag, or commit from a remote repository at run time. This lets you track and review changes, roll back to a previous version, and reproduce past runs, which is particularly valuable when collaborating with a team or when changes need to be audited.
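One way to achieve this reproducibility is to have a job run its code directly from a remote Git repository. The sketch below assumes the Jobs API 2.1 `git_source` block and the `notebook_task.source` field; the repository URL, tags, and notebook path are placeholders, and `pin_version` is a hypothetical helper showing how a rollback could be expressed as a settings change.

```python
import copy
import json

# Hypothetical job settings (Jobs API 2.1 field names) that run a notebook
# straight from a Git repository. URL, tag, and path are placeholders.
job_settings = {
    "name": "nightly-cleaning",
    "git_source": {
        "git_url": "https://github.com/example-org/data-pipelines",
        "git_provider": "gitHub",
        "git_tag": "v1.4.0",
    },
    "tasks": [
        {
            "task_key": "clean",
            "notebook_task": {
                # With source "GIT", the path is relative to the repository root.
                "notebook_path": "notebooks/clean",
                "source": "GIT",
            },
        }
    ],
}


def pin_version(settings, tag):
    """Hypothetical helper: return a copy of the settings pinned to a Git tag.

    Pointing a job at an older tag is one way to roll back a pipeline.
    """
    pinned = copy.deepcopy(settings)  # leave the original settings untouched
    git = pinned["git_source"]
    # Keep a single Git reference (branch, tag, or commit), so clear the others.
    for key in ("git_branch", "git_commit"):
        git.pop(key, None)
    git["git_tag"] = tag
    return pinned


rolled_back = pin_version(job_settings, "v1.3.2")
print(json.dumps(rolled_back["git_source"], indent=2))
```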

| Key Feature | Description |
|---|---|
| Visual Workflow Designer | A user-friendly interface to create and manage workflows. |
| Task Dependency Management | Define task dependencies to ensure proper execution order. |
| Scheduling and Triggers | Run jobs on a schedule, when new files arrive, or continuously. |
| Data Lineage Tracking | Trace how data flows between tables and where data artifacts originate (provided through Unity Catalog). |
| Error Handling and Monitoring | Monitor runs, retry failed tasks, and receive notifications. |

Automating Databricks Workflows

Automation is where Databricks Workflows delivers the most value. Automated workflows run without manual intervention, which reduces human error, keeps data fresh on a predictable cadence, and frees your team to focus on higher-value work. Here are some strategies to automate your Databricks Workflows effectively:

Implementing Scheduling and Triggers

Databricks Workflows offers flexible options for starting runs automatically. You can schedule a workflow to run at regular intervals, such as hourly, daily, or weekly, using a cron-style schedule. You can also trigger a workflow from events: for example, a file arrival trigger starts a run whenever new files land in a monitored cloud storage location, so new data is processed promptly without anyone starting the job by hand.
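Schedules and event triggers correspond to different parts of a job's settings. The sketch below assumes the Jobs API 2.1 `schedule` field (Quartz cron syntax) and the `trigger.file_arrival` field; the job names, the storage URL, and the `quartz_daily` helper are hypothetical.

```python
import json

# Two hypothetical variants of a job's settings, using Jobs API 2.1 field names.
# They are kept separate because a job is normally started by one mechanism.


def quartz_daily(hour, minute=0):
    """Hypothetical helper: Quartz cron expression for a daily run at hour:minute.

    Quartz fields are: seconds, minutes, hours, day-of-month, month, day-of-week.
    Quartz requires "?" ("no specific value") in one of the two day fields.
    """
    return f"0 {minute} {hour} * * ?"


# 1) Time-based: run every day at 02:00 UTC.
scheduled = {
    "name": "daily-ingest",
    "schedule": {
        "quartz_cron_expression": quartz_daily(2),
        "timezone_id": "UTC",
        "pause_status": "UNPAUSED",
    },
}

# 2) Event-based: start a run when new files land in a storage location.
#    The URL is a placeholder for a monitored external location or volume.
file_triggered = {
    "name": "ingest-on-arrival",
    "trigger": {
        "pause_status": "UNPAUSED",
        "file_arrival": {
            "url": "s3://example-bucket/landing/orders/",
            # Start only after no new files have arrived for 2 minutes.
            "wait_after_last_change_seconds": 120,
        },
    },
}

print(json.dumps(scheduled, indent=2))
print(json.dumps(file_triggered, indent=2))
```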

Together, scheduling and triggers make your pipelines responsive to both time and data. Scheduled and triggered runs process data in batches; for workloads that need near-real-time results, a workflow can instead run continuously, keeping a streaming job active as data arrives. In every case, the workflow runs without manual intervention.

Utilizing Parameterized Tasks

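Parameterized tasks are a powerful feature in Databricks Workflows. Parameters are named values, such as an input path or a processing date, that a workflow defines with defaults and that can be overridden when a run starts. Tasks read these values at run time, and a task can also pass values it computes on to downstream tasks. By using parameters, you can create reusable, flexible workflows that adapt to different scenarios.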

For example, imagine you have a data cleaning workflow that needs to handle various datasets. Instead of creating separate workflows for each dataset, you can use parameters to specify the input dataset dynamically. This approach saves time and effort, as you can reuse the same workflow for multiple datasets with minimal adjustments.
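A minimal sketch of the dataset example, assuming Jobs API 2.1 job-level `parameters` and the `job_parameters` field of the `run-now` endpoint. The job name, notebook path, dataset names, and job ID are placeholders, and `run_now_payload` is a hypothetical helper; the same override can also be entered in the UI when starting a run with different parameters.

```python
import json

# Hypothetical job definition with a job-level parameter named "dataset"
# (Jobs API 2.1 field names; path and default are placeholders).
job_settings = {
    "name": "clean-dataset",
    "parameters": [{"name": "dataset", "default": "sales_raw"}],
    "tasks": [
        {
            "task_key": "clean",
            "notebook_task": {"notebook_path": "/Pipelines/clean"},
        }
    ],
}

# Inside the notebook, the value would be read at run time with
#     dataset = dbutils.widgets.get("dataset")
# (dbutils only exists on Databricks, so it appears here as a comment).


def run_now_payload(job_id, dataset):
    """Body for POST /api/2.1/jobs/run-now that overrides the "dataset" parameter."""
    return {"job_id": job_id, "job_parameters": {"dataset": dataset}}


# One job definition, reused for several inputs. The job ID is hypothetical.
for name in ["sales_raw", "customers_raw", "inventory_raw"]:
    print(json.dumps(run_now_payload(123, name)))
```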

Integrating with CI/CD Pipelines

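Continuous Integration and Continuous Deployment (CI/CD) pipelines are essential for modern software development. Because Databricks jobs can be defined as code and managed through the Jobs REST API, the Databricks CLI, Databricks Asset Bundles, or the Databricks Terraform provider, CI/CD tools such as GitHub Actions, Azure DevOps, or Jenkins can automate the testing and deployment of your data pipelines.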
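Whichever CI/CD tool you use, deployment ultimately means sending the job definition stored in your repository to the workspace. The sketch below prepares such a call against the Jobs API 2.1 `jobs/reset` endpoint using only the Python standard library; the host fallback, token handling, job ID, and settings are placeholders. Higher-level options such as Databricks Asset Bundles (`databricks bundle deploy`) or the Databricks Terraform provider wrap this kind of step and may be more convenient for real projects.

```python
import json
import os
import urllib.request


def build_reset_request(host, token, job_id, new_settings):
    """Prepare a POST /api/2.1/jobs/reset request that replaces all settings of an
    existing job with the version kept in source control. Nothing is sent here."""
    body = json.dumps({"job_id": job_id, "new_settings": new_settings}).encode("utf-8")
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.1/jobs/reset",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# In CI, host and token would come from pipeline secrets; the fallbacks, the
# job ID, and the (abbreviated) settings below are placeholders. Because reset
# replaces everything, real new_settings must be the complete job definition.
request = build_reset_request(
    host=os.environ.get("DATABRICKS_HOST", "https://example.cloud.databricks.com"),
    token=os.environ.get("DATABRICKS_TOKEN", "placeholder-token"),
    job_id=123,
    new_settings={"name": "nightly-cleaning", "max_concurrent_runs": 1},
)
print(request.get_method(), request.full_url)
# A real pipeline would send it with urllib.request.urlopen(request) and check
# the response status; that step is omitted so the sketch runs offline.
```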

By integrating Databricks Workflows with CI/CD pipelines, you can ensure that your data workflows are consistently built, tested, and deployed. This integration streamlines the development process, reduces the risk of errors, and enables rapid iteration and deployment of data-driven applications.
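The testing part can start with cheap checks on the job definition itself, run in CI before anything is deployed. The hypothetical `validate_tasks` function below inspects a Jobs-API-style task list for duplicate task keys, dependencies on tasks that do not exist, and dependency cycles. It is a sketch of one possible check built on Python's `graphlib`, not a Databricks tool.

```python
from graphlib import CycleError, TopologicalSorter


def validate_tasks(tasks):
    """Hypothetical CI check: list problems in a Jobs-API-style task list.

    Returns an empty list when every task_key is unique, every depends_on
    entry names an existing task, and the dependencies contain no cycle.
    """
    problems = []
    keys = [t["task_key"] for t in tasks]
    for key in sorted({k for k in keys if keys.count(k) > 1}):
        problems.append(f"duplicate task_key: {key}")

    known = set(keys)
    graph = {}
    for t in tasks:
        deps = {d["task_key"] for d in t.get("depends_on", [])}
        for missing in sorted(deps - known):
            problems.append(f"{t['task_key']} depends on unknown task {missing}")
        # Keep only edges to known tasks so the cycle check sees a clean graph.
        graph.setdefault(t["task_key"], set()).update(deps & known)

    try:
        TopologicalSorter(graph).prepare()
    except CycleError as exc:
        # args[1] holds the nodes of the detected cycle.
        problems.append(f"dependency cycle: {exc.args[1]}")
    return problems


good = [
    {"task_key": "ingest"},
    {"task_key": "clean", "depends_on": [{"task_key": "ingest"}]},
]
broken = [
    {"task_key": "a", "depends_on": [{"task_key": "b"}]},
    {"task_key": "b", "depends_on": [{"task_key": "a"}]},
    {"task_key": "c", "depends_on": [{"task_key": "missing"}]},
]

print(validate_tasks(good))
for problem in validate_tasks(broken):
    print(problem)

# In CI, a non-empty result would fail the build, for example with
#     sys.exit(1 if problems else 0)
```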

Automating Workflow Monitoring and Alerts

To keep automated workflows running smoothly, you need to monitor their execution and be alerted when something goes wrong. The Workflows UI shows the status, duration, and output of every run and of each task within it, and you can configure notifications by email or through destinations such as Slack, Microsoft Teams, or webhooks.

You can set up alerts that notify you or your team when a run fails or exceeds an expected duration, and you can configure tasks to retry automatically so that transient failures are handled without human intervention. This proactive approach helps you identify and resolve issues promptly, maintaining the reliability and efficiency of your automated workflows.
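A sketch of how these alerts and retries could be expressed in a job definition, assuming the Jobs API 2.1 `email_notifications`, `health`, `timeout_seconds`, and task retry fields. The email address, thresholds, and notebook path are placeholders; Slack, Teams, or webhook alerts would use notification destinations configured in the workspace instead of email.

```python
import json

# Hypothetical monitoring-related job settings (Jobs API 2.1 field names).
# Addresses, thresholds, and the notebook path are placeholders.
monitoring_settings = {
    "email_notifications": {
        "on_failure": ["data-oncall@example.com"],
        "on_duration_warning_threshold_exceeded": ["data-oncall@example.com"],
    },
    # Send a duration warning if a run exceeds one hour (the run keeps going).
    "health": {
        "rules": [
            {"metric": "RUN_DURATION_SECONDS", "op": "GREATER_THAN", "value": 3600}
        ]
    },
    # Hard limit: runs longer than two hours are terminated.
    "timeout_seconds": 7200,
    "tasks": [
        {
            "task_key": "clean",
            "notebook_task": {"notebook_path": "/Pipelines/clean"},
            # Retry transient failures up to twice, at least 5 minutes apart,
            # before the task (and the run) is marked as failed.
            "max_retries": 2,
            "min_retry_interval_millis": 5 * 60 * 1000,
            "retry_on_timeout": False,
        }
    ],
}

task = monitoring_settings["tasks"][0]
max_attempts = 1 + task["max_retries"]  # the first attempt plus each retry
print(f"{task['task_key']}: up to {max_attempts} attempts before alerting")
print(json.dumps(monitoring_settings["health"], indent=2))
```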

Real-World Use Cases and Benefits

Automated Databricks Workflows apply to a wide range of industries. The following use cases illustrate common patterns and the benefits they bring:

Financial Services: Fraud Detection and Prevention

In the financial industry, timely and accurate fraud detection is crucial. Databricks Workflows can automate the entire fraud detection process, from data ingestion and feature engineering to model training and deployment. By automating this workflow, financial institutions can quickly identify suspicious activities and take immediate action, minimizing potential losses.

Healthcare: Clinical Trial Analysis

Healthcare organizations often deal with vast amounts of data during clinical trials. Databricks Workflows can automate the data processing and analysis pipeline, ensuring efficient and standardized data handling. This automation streamlines the analysis process, shortens the time from data collection to results, and enables healthcare professionals to make data-driven decisions sooner.

Retail: Personalized Marketing Campaigns

Retailers can leverage Databricks Workflows to automate the creation of personalized marketing campaigns. By integrating customer data, purchase history, and behavioral patterns, retailers can design targeted campaigns. Automating this workflow allows retailers to quickly adapt their marketing strategies, improving customer engagement and sales.

Energy Sector: Predictive Maintenance

In the energy industry, predictive maintenance is essential for optimizing equipment performance and reducing downtime. Databricks Workflows can automate the entire predictive maintenance process, from data collection and preprocessing to model training and deployment. This automation enables energy companies to proactively identify potential equipment failures, improving operational efficiency and reducing costs.

Conclusion

Databricks Workflows, combined with automation, offers a powerful solution for organizations seeking to streamline their data processing and analysis. By implementing scheduling, parameterized tasks, CI/CD integration, and monitoring, you can unlock the full potential of your data pipelines. The benefits of automation are vast, from increased efficiency and reduced manual errors to faster time-to-market for data-driven applications.

As more industries rely on data, orchestration tools like Databricks Workflows help organizations turn raw data into insights and decisions. Automating your workflows is a practical way to unlock the full potential of your data.

How does Databricks Workflows compare to traditional ETL tools?

Traditional ETL (Extract, Transform, Load) tools typically bundle extraction, transformation, and loading into a single proprietary engine, often configured through graphical mappings. Databricks Workflows instead orchestrates tasks that you write as notebooks, SQL queries, or Python code and runs them on Spark-based compute, adding scheduling, dependency management, and monitoring on top. This makes it well suited to large-scale processing, complex transformations, and pipelines that mix data engineering with data science, making it a powerful alternative to traditional ETL solutions.

What are the key benefits of automating Databricks Workflows?

Automating Databricks Workflows brings several advantages. It reduces manual errors, saves time and resources, and ensures consistent and reliable data processing. Automation also enables faster iteration and deployment of data pipelines, allowing organizations to stay agile and responsive to changing business needs.

Can Databricks Workflows handle real-time data processing?

Yes, with some nuance. A workflow task can run a Spark Structured Streaming job that processes data as it arrives, and running the workflow in continuous mode keeps that job active. This gives near-real-time processing, which suits scenarios that require prompt data analysis and decision-making.
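In practice, this usually means a task that runs a Spark Structured Streaming query inside a job configured to run continuously. A minimal sketch, assuming the Jobs API 2.1 `continuous` setting; the job name, table names, checkpoint path, and notebook path are placeholders.

```python
import json

# Hypothetical settings for a continuously running job (Jobs API 2.1 field names).
# The notebook path is a placeholder; the notebook is assumed to start a Spark
# Structured Streaming query such as:
#     (spark.readStream.table("raw_events")
#          .writeStream
#          .option("checkpointLocation", "<checkpoint-dir>")
#          .toTable("clean_events"))
streaming_job = {
    "name": "events-stream",
    # Continuous mode: Databricks keeps one run active and starts a new run
    # when the current one ends, so the stream keeps processing new data.
    "continuous": {"pause_status": "UNPAUSED"},
    "tasks": [
        {
            "task_key": "stream_events",
            "notebook_task": {"notebook_path": "/Pipelines/stream_events"},
        }
    ],
}

print(json.dumps(streaming_job, indent=2))
```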

How does Databricks Workflows ensure data security and privacy?

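Databricks Workflows inherits the platform's security controls. Permissions on each job determine who can view, run, or manage it, jobs can run under a dedicated service principal rather than a personal account, and Unity Catalog governs which data each task may read or write. Databricks also encrypts data at rest and in transit, protecting sensitive data during processing and storage; the exact encryption options depend on your cloud provider and workspace configuration.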