Consolidate Duplicates: A Quick Guide

Consolidating duplicates is a core data-management task. In large datasets and complex systems, duplicate entries introduce errors and undermine decision-making. This guide gives a short overview of the process and offers practical strategies for consolidating duplicates.

Understanding the Impact of Duplicates

Duplicate entries in databases or spreadsheets can arise from various sources, including human error, system glitches, or data integration from multiple sources. While a single duplicate may seem insignificant, the cumulative effect can be detrimental. Consider the following potential consequences:

- Data Inconsistency: Duplicates can lead to conflicting information, making it challenging to identify the correct and up-to-date records.

- Inefficient Resource Allocation: Duplicate entries waste valuable storage space and computational resources, impacting system performance.

- Misinformed Decision-Making: Relying on duplicate data can skew analysis and lead to flawed conclusions, affecting business strategies.

- Customer Experience Issues: Inaccurate or incomplete customer data due to duplicates can result in poor service and lost opportunities.

Strategies for Effective Consolidation

Consolidating duplicates requires a systematic approach tailored to the specific dataset and its characteristics. Here are some strategies to tackle this task effectively:

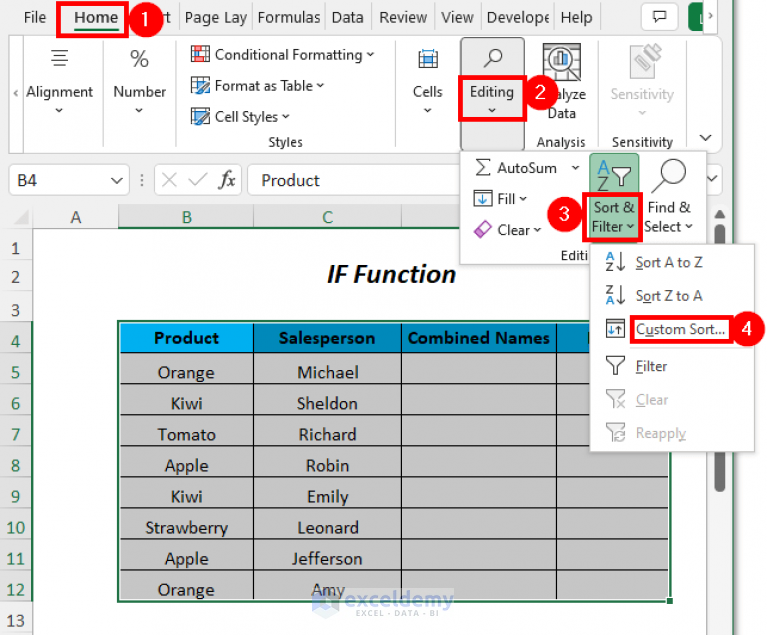

1. Identify and Flag Duplicates

The first step is to identify potential duplicates. Manual review works for small datasets; larger ones call for automated tools. Compare records on fields that should be unique, such as an email address, phone number, or customer ID, and on combinations of common attributes such as name and address. Once identified, flag the candidates for further analysis rather than changing them immediately.

| Identifier | Duplicate Count |
|---|---|
| Email Address | 25 |
| Phone Number | 18 |
| Customer ID | 12 |

💡 Consider using data visualization tools to spot patterns and clusters that may indicate duplicate entries.
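The identify-and-flag step can be sketched in a few lines of Python. This is a minimal illustration, not a complete tool: the function names `normalize_email` and `flag_duplicates`, the record layout, and the sample data are all hypothetical, and it assumes that lowercasing and trimming an email address is enough to make equivalent values compare equal.

```python
from collections import defaultdict


def normalize_email(value):
    """Lowercase and trim so trivially different spellings compare equal."""
    return value.strip().lower()


def flag_duplicates(records, key, normalize=lambda v: v):
    """Group records by a normalized key and keep groups with 2+ records."""
    groups = defaultdict(list)
    for record in records:
        value = record.get(key)
        if value:  # skip records where the identifier is missing
            groups[normalize(value)].append(record)
    return {k: v for k, v in groups.items() if len(v) > 1}


# Hypothetical sample data, for illustration only.
customers = [
    {"id": 1, "name": "Ana Diaz", "email": "ana@example.com"},
    {"id": 2, "name": "Ana Díaz", "email": " Ana@Example.com "},
    {"id": 3, "name": "Ben Cole", "email": "ben@example.com"},
]

flagged = flag_duplicates(customers, "email", normalize_email)
for email, group in flagged.items():
    print(email, [r["id"] for r in group])  # ana@example.com [1, 2]
```

The function only reports groups; it does not modify or delete anything, which keeps flagging separate from the later merge-or-remove decision.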

2. Analyze and Prioritize

Not all duplicates are created equal. Some may be easy to consolidate, while others require careful consideration. Prioritize the duplicates based on their potential impact and the ease of consolidation. For instance, duplicates with complete and accurate information may take precedence over those with missing data.
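One possible way to turn this prioritization into something repeatable is to score each group of duplicates. The sketch below assumes, purely for illustration, that group size is a rough proxy for impact and that field completeness is a rough proxy for ease of consolidation; the names `completeness` and `prioritize` and the sample groups are hypothetical.

```python
def completeness(record, fields):
    """Fraction of the given fields that hold a non-empty value."""
    filled = sum(1 for f in fields if record.get(f) not in (None, ""))
    return filled / len(fields)


def prioritize(groups, fields):
    """Order duplicate groups: larger groups first, then by best completeness."""
    def sort_key(item):
        _, group = item
        best = max(completeness(r, fields) for r in group)
        return (-len(group), -best)

    return sorted(groups.items(), key=sort_key)


# Hypothetical duplicate groups keyed by normalized email.
FIELDS = ["name", "phone"]
groups = {
    "a@example.com": [{"name": "A", "phone": ""}, {"name": "A", "phone": None}],
    "b@example.com": [{"name": "B", "phone": "555-0100"}, {"name": "B", "phone": ""}],
    "c@example.com": [{"name": "C", "phone": ""}] * 3,
}

ordered = prioritize(groups, FIELDS)
print([key for key, _ in ordered])
```

A real scoring rule would likely weigh business factors too, such as how often the record is used.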

3. Merge or Remove

The next step is to decide whether to merge the duplicate entries into a single, complete record or remove one of the duplicates entirely. Merging is ideal when the duplicates contain complementary information, ensuring no data loss. However, if one duplicate is more accurate or complete, it may be better to remove the other.
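A merge of complementary records might look like the following sketch. It assumes a simple rule that is only one of many reasonable choices: visit records from most to least complete and take the first non-empty value for each field. The function name `merge_records` and the sample records are hypothetical.

```python
def merge_records(records, fields):
    """Merge duplicates field by field, taking the first non-empty value.

    Records are visited from most to least complete, so when two records
    disagree on a field, the more complete record wins.
    """
    def filled(record):
        return sum(1 for f in fields if record.get(f) not in (None, ""))

    ordered = sorted(records, key=filled, reverse=True)
    merged = {}
    for field in fields:
        merged[field] = next(
            (r[field] for r in ordered if r.get(field) not in (None, "")),
            None,  # no record had a value for this field
        )
    return merged


# Hypothetical duplicates holding complementary information.
first = {"name": "Ana Diaz", "email": "ana@example.com", "phone": ""}
second = {"name": "", "email": "ana@example.com", "phone": "555-0100"}

merged = merge_records([first, second], ["name", "email", "phone"])
print(merged)
```

When one record is simply a stale copy of the other, removal is the degenerate case of the same rule: the merged result equals the better record.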

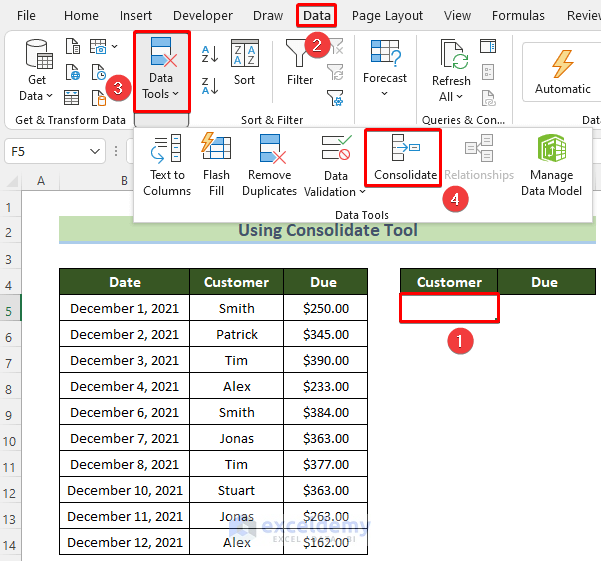

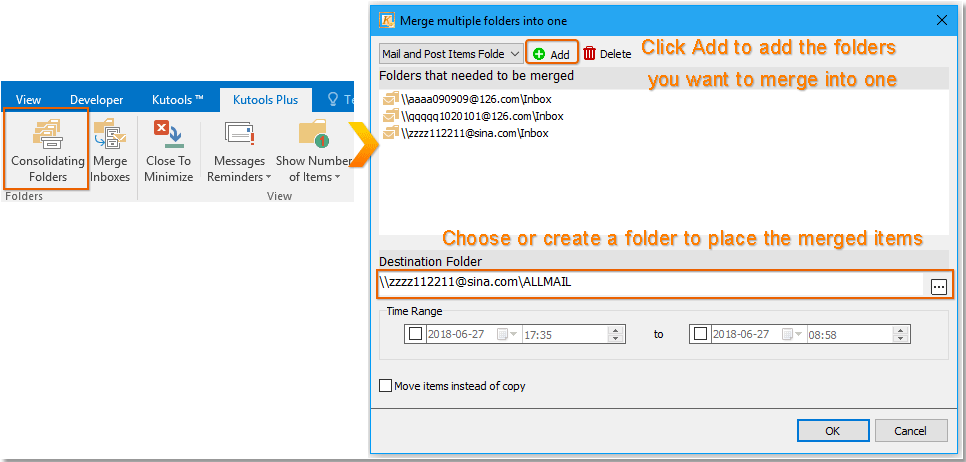

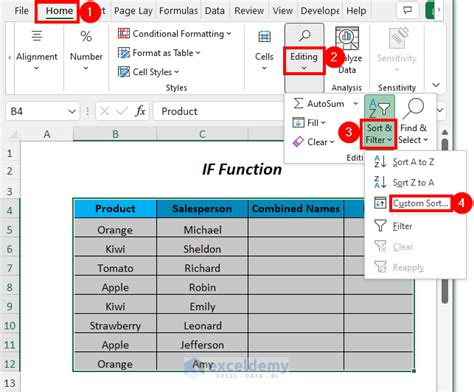

4. Implement Automation

To streamline the consolidation process, leverage automation tools and techniques. These tools can compare records, identify similarities, and suggest consolidation actions. Automation can significantly reduce manual effort and potential errors, especially for large datasets.
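As a rough illustration of how such a tool compares records, the sketch below uses `difflib.SequenceMatcher` from the Python standard library to score name similarity and suggest candidate pairs. The threshold of 0.85, the function names, and the sample records are assumptions made for the example; dedicated record-linkage tools use more elaborate techniques, and comparing every pair this way does not scale to very large datasets.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similarity(a, b):
    """Case-insensitive similarity ratio between two strings, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def suggest_pairs(records, field, threshold=0.85):
    """Return (id, id, score) for every pair whose field values look alike."""
    suggestions = []
    for left, right in combinations(records, 2):
        score = similarity(left[field], right[field])
        if score >= threshold:
            suggestions.append((left["id"], right["id"], round(score, 2)))
    return suggestions


# Hypothetical sample data, for illustration only.
people = [
    {"id": 1, "name": "Jon Smith"},
    {"id": 2, "name": "John Smith"},
    {"id": 3, "name": "Mary Jones"},
]

pairs = suggest_pairs(people, "name")
print(pairs)
```

The output is a list of suggestions, not a list of merges; deciding what to do with each pair is a separate step.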

5. Establish Standard Procedures

Duplicates can arise from various sources, so it’s crucial to establish standard procedures to prevent their occurrence. This may involve implementing data validation checks, enforcing data entry guidelines, and regularly auditing the system for potential duplicates.
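One common form of validation check is a uniqueness constraint enforced by the database itself. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `customers` table, its columns, and the `add_customer` helper are hypothetical, and normalizing the email by trimming and lowercasing is an assumption about what counts as "the same" address.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers ("
    " id INTEGER PRIMARY KEY,"
    " name TEXT NOT NULL,"
    " email TEXT NOT NULL UNIQUE)"  # the database rejects repeated emails
)


def add_customer(conn, name, email):
    """Insert a customer; return False if the normalized email already exists."""
    normalized = email.strip().lower()
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO customers (name, email) VALUES (?, ?)",
                (name, normalized),
            )
        return True
    except sqlite3.IntegrityError:
        return False


print(add_customer(conn, "Ana Diaz", "ana@example.com"))    # True
print(add_customer(conn, "Ana Díaz", " Ana@Example.com "))  # False
```

Putting the rule in the schema means every entry path is covered, not only the ones that remember to check.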

Best Practices for Accurate Consolidation

Ensuring accurate consolidation is critical to maintaining data integrity. Here are some best practices to consider:

- Regularly Audit for Duplicates: Schedule periodic audits to identify and consolidate duplicates, preventing their accumulation.

- Maintain Version Control: Keep a record of changes made during consolidation, especially when merging records, to ensure traceability.

- Use Advanced Matching Techniques: Employ sophisticated matching algorithms or machine learning to identify duplicates with subtle differences.

- Collaborate with Data Owners: Engage with stakeholders and data owners to understand the context and ensure accurate consolidation.
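The traceability practice above can be as simple as recording a before-and-after snapshot for every merge. The following is a minimal sketch under that assumption; `log_merge`, the entry layout, and the sample records are hypothetical, and a production system would write to durable storage rather than an in-memory list.

```python
import json
from datetime import datetime, timezone


def log_merge(audit_log, source_records, merged_record, reason):
    """Append a JSON snapshot of a merge so it can be reviewed or undone later."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "reason": reason,
        "before": source_records,  # the original duplicates, unchanged
        "after": merged_record,    # the consolidated record
    }
    audit_log.append(json.dumps(entry, sort_keys=True))
    return entry


# Hypothetical merge, for illustration only.
sources = [{"id": 1, "phone": ""}, {"id": 2, "phone": "555-0100"}]
result = {"id": 1, "phone": "555-0100"}

audit_log = []
log_merge(audit_log, sources, result, "same normalized email")

# The originals can be recovered from the log if the merge was a mistake.
restored = json.loads(audit_log[0])["before"]
print(restored == sources)  # True
```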

Future Implications and Trends

As data volumes continue to grow, duplicate consolidation will remain a challenge. Advances in technology can help: artificial intelligence and machine learning algorithms can automate parts of the identification and consolidation process, improving accuracy and efficiency. Blockchain technology is sometimes suggested as well, but immutability by itself does not prevent duplicates. Uniqueness still has to be enforced when a record is written, and an immutable ledger makes a mistaken entry harder to correct afterward.

In the evolving landscape of data management, staying updated with these technological advancements and adopting best practices will be crucial to maintaining a clean and reliable dataset.

What are some common causes of duplicate entries in a dataset?

Duplicate entries can arise from various sources, including human error during data entry, system glitches, data migration from multiple sources, and lack of proper validation checks. Additionally, data from external partners or customers may introduce duplicates if not properly cleaned.

How can I identify duplicates in a large dataset efficiently?

For large datasets, using automated tools that employ advanced algorithms for record comparison is highly efficient. These tools can identify duplicates based on various attributes and suggest consolidation actions. Regularly running these tools as part of your data maintenance process is recommended.

What should I consider when deciding whether to merge or remove duplicate entries?

The decision to merge or remove duplicates depends on the nature of the data and its impact. Merging is ideal when both duplicates contain valuable information and you want to retain all details. Removal is suitable when one duplicate is more accurate or complete, and retaining both would cause confusion. Consider the context and potential consequences of each option.

Are there any potential risks associated with consolidating duplicates automatically?

While automation can significantly streamline the consolidation process, it’s essential to exercise caution. Automated tools may not always capture subtle nuances or context, leading to incorrect consolidation. Regularly reviewing the automated results and implementing robust validation checks can mitigate these risks.
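One way to build that review step into an automated process is to act only on high-confidence matches and route borderline ones to a person. The sketch below assumes a similarity score between 0.0 and 1.0 is already available; the `triage` function and both threshold values are hypothetical and would need tuning against real data.

```python
def triage(score, auto_merge_at=0.95, review_at=0.80):
    """Decide what to do with a candidate pair based on its similarity score."""
    if score >= auto_merge_at:
        return "auto_merge"     # confident enough to consolidate automatically
    if score >= review_at:
        return "needs_review"   # plausible match; a person should confirm
    return "keep_separate"      # too different to treat as duplicates


for score in (0.99, 0.87, 0.40):
    print(score, triage(score))
```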

How can I prevent duplicate entries from occurring in the first place?

Preventing duplicates requires a multi-faceted approach. Implementing strict data validation checks during data entry, enforcing unique identifiers, and regular data audits can help. Additionally, educating users about the importance of data integrity and providing clear guidelines can reduce the occurrence of duplicates.